AI & US: Robots recognize our emotions!

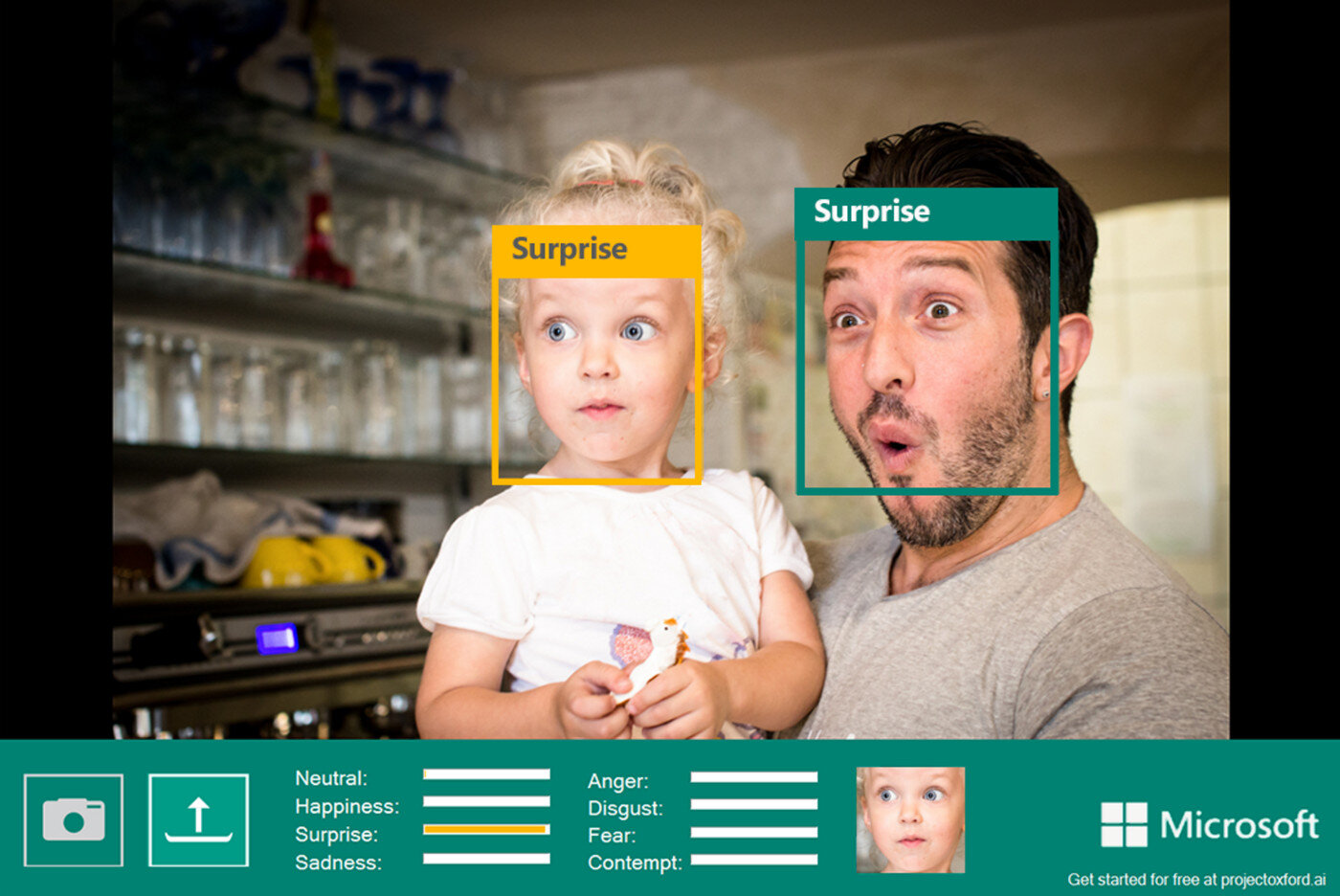

Besides recognizing cute kittens, dogs, pigs and so on, can AI systems also be trained to recognize our emotions? Besides being artificial, is AI also emotionally intelligent? And do we actually want this kind of technology, or should we revolt? This is the second part of the AI & US series, in which you learn to train and use AI classification systems in your classroom!

Previously on …

This post continues the series on object classification by AI systems. Part 1 can be found on this blog, so we will not repeat the full theory behind these AI classification systems here. For that, see part 1, where we train an AI model to distinguish dogs, pigs, bread and other objects from one another. There you learn the different steps (data, training, prediction) in detail and see the possibilities and limitations of such a system.

About emotion and emotion understanding

Emotion, like AI, is hard to define. In addition to natural and artificial intelligence, there is also such a thing as emotional intelligence. But what does all that mean?

Emotion

As with AI, there is currently no single definition of emotion. Depending on their research field, researchers often place a different emphasis in their definition. Cognitive psychologists, for example, tend to emphasize the cognitive features of emotions. According to Scherer (2005), an emotion involves five subsystems that together give rise to five emotion components: assessment (appraisal), physical reactions, action tendencies, facial and vocal expression, and subjective feelings.

Emotion understanding

Emotion understanding means knowing these five components of emotion, recognizing them and being able to distinguish them in others. An AI system trained to recognize a facial expression and infer an emotion from it therefore sees only one of these components, not the full picture.

Possibilities!

AI systems based on recognition (not limited to facial expressions) can be used in various applications, such as:

An AI mirror that analyzes your face and breath, which could be used to adjust your medication dose from day to day based on those measurements.

AI in a school laptop that recognizes when a student is struggling with the subject matter.

AI at border control or in asylum procedures that is meant to detect lies from camera images.

Dangers!

That sounds innovative and sometimes interesting, but these applications clearly come with dangers. We can list three criticisms of these forms of innovation.

Does AI understand what it sees?

The first criticism concerns the level of understanding. The AI, including the one in this project, has learned which patterns or images belong to a certain class, so it can recognize them. But just as with language algorithms, or in thought experiments such as Searle's Chinese Room argument, we can ask to what extent recognition amounts to understanding.

If you want to test or trick an AI yourself, this is the place to be!

White male bias in the data

In this project we learned that an AI model must be trained before it can recognize or predict anything, and training requires data. Such datasets are easier to obtain than you might think, especially since the rise of social media. How they are composed, however, is often unclear. When we take a closer look at such datasets, we notice that they contain disproportionately many images of white people, especially men. As a result, a trained model achieves relatively good results in the test phase on white subjects and significantly worse results on people of other backgrounds, sometimes with major consequences.
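One way to make the effect of an imbalanced dataset visible is to report accuracy per group rather than a single overall number. The sketch below is a minimal Python illustration that assumes a test set in which each example carries a true emotion label, the model's prediction and a group tag. The function name `accuracy_per_group`, the group tags "A" and "B" and the toy data are all hypothetical; they do not come from this project or from any real study.

```python
from collections import defaultdict


def accuracy_per_group(labels, predictions, groups):
    """Return the fraction of correct predictions for each group tag."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for label, pred, group in zip(labels, predictions, groups):
        total[group] += 1
        if label == pred:
            correct[group] += 1
    # Every group in `total` has at least one example, so no division by zero.
    return {g: correct[g] / total[g] for g in total}


# Hypothetical toy test set: true emotion, model prediction, group tag.
labels      = ["happy", "sad", "happy", "angry", "happy", "sad", "angry", "happy"]
predictions = ["happy", "sad", "happy", "angry", "sad",   "sad", "angry", "angry"]
groups      = ["A",     "A",   "A",     "A",     "B",     "B",   "B",     "B"]

# A single overall score, which can hide differences between groups.
overall = sum(l == p for l, p in zip(labels, predictions)) / len(labels)
print(f"overall: {overall:.2f}")  # prints "overall: 0.75"

for group, acc in sorted(accuracy_per_group(labels, predictions, groups).items()):
    print(f"group {group}: {acc:.2f}")  # prints "group A: 1.00", then "group B: 0.50"
```

On this made-up data the overall accuracy of 0.75 hides that the model is right on every example from group A but on only half of group B. The same breakdown can be applied to any classifier's predictions, although a meaningful audit needs far more test examples per group than this toy set.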

In addition, the expression of emotion is itself culturally colored. A smile does not carry the same connotation in all cultures and groups; it has been found, for example, that people in some cultures smile up to 30% less than people in others.

On the side of both the data and emotion understanding, we must therefore be alert to any form of prejudice or bias.

Your face is yours

Finally, there is the privacy argument. Your face, your facial expressions and your emotions are deeply personal. Whereas it used to be easy to keep your face out of a dataset, the prevalence of social media and surveillance cameras makes that difficult today.

Solutions!

Solutions, especially for the privacy aspect, can be found at several levels, such as:

Individual

You can inform yourself and take the possibilities and limitations into account in your own actions and designs. It is also important, for example, that education addresses artificial intelligence and privacy.

Organizations

You can sign petitions, such as the one from the organization Reclaim your Face, or join privacy groups such as the Ministry of Privacy. Amnesty International also calls for action against dangerous AI applications that reinforce racial prejudice.

Governments

Governments and institutions such as the European Council are also striving for more privacy and protection for their citizens, for example through the European GDPR legislation.

But even governments are not without sin. The Belgian police, for example, appear to use Clearview AI's illegal facial recognition tool, a practice that the police, part of the Belgian government, have lied about several times.

Conclusions

We can teach a computer (an AI) to classify data such as images.

Good training data is important for AI, and we must point out its potential hazards.

Emotions are layered (face, voice, body).

Emotions are culturally determined.

Privacy is important, but also at risk!

AI is rarely a miracle solution (beware of solutionism).

Contact

Have questions or need more information? Head on over to the contact page!